Using lessons learnt from eye imaging, researchers at Duke University have developed a high-precision, high-speed LiDAR (light detection and ranging) system that they claim is 25 times faster than previous LiDAR demonstrations. This gives the system a frame rate comparable to that of video cameras, greatly improving its potential for use in imaging systems for autonomous technologies such as driverless cars and robots.

Real-time, high-resolution 3D imaging is desirable in many fields, including biomedical imaging, robotics, virtual reality, 3D printing and autonomous vehicles. For autonomous systems, much of the current attention is on LiDAR. Most LiDAR systems are based on time-of-flight: they send out laser pulses and measure the time it takes for the reflections to return, using that information to map their surroundings.
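The time-of-flight principle comes down to one line of arithmetic: the pulse travels to the target and back, so the range is half the round-trip path. A minimal Python sketch, assuming propagation at the vacuum speed of light (the function name `tof_range` is hypothetical, for illustration only):

```python
# Speed of light in vacuum, m/s (exact by SI definition).
C = 299_792_458.0

def tof_range(round_trip_time_s: float) -> float:
    """Range to a target from a pulse's measured round-trip time.

    The pulse covers the distance twice (out and back), hence the factor 1/2.
    Assumption: light travels at c; the refractive index of air is ignored.
    """
    return C * round_trip_time_s / 2.0

# A reflection arriving 10 ns after emission comes from roughly 1.5 m away.
print(f"{tof_range(10e-9):.4f} m")
```

The same relation shows why pulsed systems need fast timing electronics: 1 cm of range corresponds to only about 67 ps of round-trip time.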

In recent years, to improve the speed of LiDAR and reduce errors caused by ambient light, researchers have been looking at frequency-modulated continuous wave (FMCW) LiDAR. This technique works like conventional LiDAR, except that the system continuously changes, or modulates, the frequency of the laser. When the reflected light returns, the system compares it with the light being emitted at that moment: because the laser frequency has moved on during the round trip, the frequency difference (equivalently, the accumulated phase shift) encodes the time taken. This provides a far more accurate measure of distance. The frequency pattern created by the modulated laser also makes it easier for the system to distinguish the reflected laser light from other light sources.
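In FMCW ranging with a linear frequency sweep of bandwidth B lasting T, a reflector at range R returns light delayed by 2R/c, so mixing it with the outgoing light produces a beat frequency f_b = (B/T)(2R/c); a Fourier transform of the beat signal then reveals the range. The sketch below simulates this under idealised assumptions (single reflector, perfectly linear sweep, no noise). The chirp parameters and function names are hypothetical and chosen for illustration; they are not the Duke system's values.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s

# Hypothetical chirp parameters (not from the paper).
BANDWIDTH_HZ = 1e9      # optical frequency excursion of one sweep, B
SWEEP_TIME_S = 10e-6    # duration of one linear sweep, T
SAMPLE_RATE_HZ = 20e6   # digitizer sample rate, fs

def beat_signal(range_m: float) -> np.ndarray:
    """Ideal beat (interference) signal for a single reflector at range_m."""
    chirp_rate = BANDWIDTH_HZ / SWEEP_TIME_S   # Hz per second
    delay = 2.0 * range_m / C                  # round-trip time, s
    f_beat = chirp_rate * delay                # beat frequency, Hz
    n = int(round(SWEEP_TIME_S * SAMPLE_RATE_HZ))
    t = np.arange(n) / SAMPLE_RATE_HZ
    return np.cos(2.0 * np.pi * f_beat * t)

def estimate_range(signal: np.ndarray) -> float:
    """Convert the strongest peak of the beat spectrum back into a range."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / SAMPLE_RATE_HZ)
    f_peak = freqs[np.argmax(spectrum)]
    chirp_rate = BANDWIDTH_HZ / SWEEP_TIME_S
    return C * f_peak / (2.0 * chirp_rate)

print(f"estimated range: {estimate_range(beat_signal(1.5)):.3f} m")
```

Each FFT bin here corresponds to c/(2B), about 15 cm, so the estimate is only as fine as that bin spacing; a wider sweep narrows the bins.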

FMCW LiDAR works on the same principle as the medical imaging technique swept-source optical coherence tomography (OCT). Swept-source OCT is primarily used in ophthalmology to obtain high-resolution cross-sectional images of the retina. The technique uses a laser that sweeps over a range of frequencies to build up 3D volumetric images of the back of the eye to a depth of several millimetres.

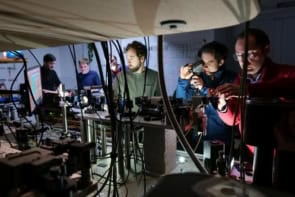

In recent years, several developments have helped increase the range, speed and resolution of swept-source OCT. In their latest work, biomedical engineer Joseph Izatt and his colleagues applied some of the lessons they have learnt with OCT to create high-speed and high-precision FMCW LiDAR. They discuss their results in Nature Communications.

First, the researchers used an akinetic all-semiconductor sweep source. This does away with any form of mechanical movement in the mechanism that creates the sweep of laser frequencies. Sources like this have previously been shown to extend the imaging range of OCT and FMCW LiDAR.

Many FMCW LiDAR systems have a limited scanning speed because of the mechanical mirrors, or other electromechanical systems, used to move the laser beam across the field of view. One way to address this, and enable high-speed imaging, is again to move away from mechanical systems, this time to beam scanners with no moving parts. Izatt and his team used a diffraction grating, which sends light of different wavelengths off at different angles. Combined with the sweep source, which tunes rapidly through its wavelengths, this steers the beam across the scene much faster than is possible with mechanical systems.
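The link between wavelength and beam direction is the standard grating equation, d(sin θi + sin θm) = mλ, where d is the groove spacing and both angles are measured from the grating normal. A minimal sketch, assuming a hypothetical grating (600 lines/mm, 30° incidence, first order) and a hypothetical 1530 to 1570 nm sweep; these are not the parameters used by Izatt's group:

```python
import math

def diffraction_angle_deg(wavelength_m, groove_spacing_m, incidence_deg, order=1):
    """Output angle (degrees) from the grating equation
    d (sin θi + sin θm) = m λ, angles measured from the grating normal.
    Returns None if the requested order does not propagate."""
    s = order * wavelength_m / groove_spacing_m - math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        return None  # evanescent order: no propagating beam
    return math.degrees(math.asin(s))

# Hypothetical setup: 600 lines/mm grating, 30 degree incidence.
d = 1e-3 / 600
for nm in (1530, 1550, 1570):
    theta = diffraction_angle_deg(nm * 1e-9, d, 30.0)
    print(f"{nm} nm -> {theta:.2f} deg")
```

As the source sweeps from the shortest to the longest wavelength, the output angle moves monotonically, so one frequency sweep traces out one line of the scan with no moving parts.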

While these techniques can improve the range of imaging systems, they come at a cost to depth resolution. OCT systems combat this by using more sampling points, faster photodetectors and longer acquisition times. But LiDAR systems do not need the same depth resolution, as they are not probing structures several millimetres deep in a patient's retina. They just need to map an object's surface.
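The trade-off between resolution and range can be made concrete with two standard relations for swept-source ranging. They are a textbook sketch under simplifying assumptions (a linear sweep with a flat spectrum, propagation in air, a real-valued signal sampled at $N$ evenly spaced points), not formulas quoted from the paper. For a sweep covering optical bandwidth $B$, equivalently $\Delta\lambda$ around centre wavelength $\lambda_0$:

$$
\delta z = \frac{c}{2B} \approx \frac{\lambda_0^{2}}{2\,\Delta\lambda},
\qquad
z_{\max} = \frac{N}{2}\,\delta z = \frac{N c}{4B}.
$$

The detected fringe varies as $\cos(4\pi\nu z/c)$ across optical frequency $\nu$, so it completes $2zB/c$ cycles over the sweep; two reflectors become resolvable when their cycle counts differ by about one, giving $\delta z$. The range limit is the Nyquist condition $(2z/c)(B/N) \le 1/2$ for samples spaced $B/N$ apart. Depth resolution therefore depends only on the sweep bandwidth, while imaging range depends on the number of samples per unit bandwidth. (A Gaussian rather than flat spectrum changes the prefactor of $\delta z$ slightly.)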

The researchers found that by reducing the number of spectral sampling points and narrowing the laser’s range of frequencies, they could achieve real-time 3D imaging, with a frame rate as high as 33.2 Hz. With this compressed sampling approach, they demonstrated video-rate imaging of various everyday objects, including a moving human hand, with an imaging range of up to 32.8 cm.
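Why fewer samples and a narrower sweep help can be sketched with an idealised model: if the digitizer rate, rather than the source, limits how fast each sweep is recorded, then fewer samples per sweep mean shorter sweeps and more frames per second, and narrowing the bandwidth in proportion keeps the imaging range fixed while coarsening depth resolution. All numbers below (digitizer rate, depth scans per frame, N and B) are hypothetical and are not the values reported in the paper; dead time between sweeps is ignored.

```python
C = 299_792_458.0  # speed of light, m/s

def sweep_tradeoffs(bandwidth_hz, samples_per_sweep, sample_rate_hz, lines_per_frame):
    """Idealised model returning (depth resolution m, imaging range m, frame rate Hz).

    Assumes a linear sweep sampled evenly at a fixed digitizer rate, a
    real-valued signal (N/2 resolvable depth bins) and no dead time.
    """
    depth_res = C / (2.0 * bandwidth_hz)                      # set by sweep bandwidth
    max_range = samples_per_sweep * C / (4.0 * bandwidth_hz)  # N/2 bins of depth_res
    sweep_time = samples_per_sweep / sample_rate_hz           # fewer samples, shorter sweep
    frame_rate = 1.0 / (sweep_time * lines_per_frame)
    return depth_res, max_range, frame_rate

# Hypothetical numbers: 400 MS/s digitizer, 10,000 depth scans per frame.
FS, LINES = 400e6, 10_000
for label, bw, n in (("full", 2e12, 2048), ("compressed", 0.5e12, 512)):
    res, rng, fps = sweep_tradeoffs(bw, n, FS, LINES)
    print(f"{label:>10}: resolution {res * 1e6:.0f} um, range {rng * 100:.1f} cm, {fps:.1f} Hz")
```

In this toy model, cutting both N and B by a factor of four leaves the range unchanged, quadruples the frame rate and makes the depth resolution four times coarser, which is the kind of trade a surface-mapping LiDAR can afford.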


The system currently has some limitations, notably its short imaging range. This is limited by the bandwidth of the available components, but could be extended to about 2 m using a higher-specification commercial photodetector and digitizer. The researchers emphasize, however, that their approach shows the great potential of FMCW LiDAR for a range of imaging applications.
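One way to see why component bandwidth caps the range: for a linear sweep the beat frequency grows in proportion to distance, so the farthest measurable target is the one whose beat frequency still fits within the photodetector and digitizer bandwidth. A minimal sketch under that idealised linear-sweep model; the sweep parameters and function names are hypothetical, for illustration only:

```python
C = 299_792_458.0  # speed of light, m/s

def beat_frequency_hz(range_m, sweep_bw_hz, sweep_time_s):
    """Beat frequency for a reflector at range_m, given a linear sweep of
    bandwidth sweep_bw_hz lasting sweep_time_s."""
    chirp_rate = sweep_bw_hz / sweep_time_s  # Hz per second
    return chirp_rate * 2.0 * range_m / C

def max_range_m(detection_bw_hz, sweep_bw_hz, sweep_time_s):
    """Largest range whose beat frequency still fits inside the detection
    bandwidth (photodetector and digitizer together)."""
    chirp_rate = sweep_bw_hz / sweep_time_s
    return detection_bw_hz * C / (2.0 * chirp_rate)

# Hypothetical sweep: 100 GHz in 10 us.
B, T = 100e9, 10e-6
for r in (0.33, 2.0):
    print(f"{r:.2f} m -> beat at {beat_frequency_hz(r, B, T) / 1e6:.1f} MHz")
```

Because the relation is linear, reaching about six times the range with the same sweep needs about six times the detection bandwidth, and the digitizer must also sample at more than twice the highest beat frequency to satisfy the Nyquist criterion.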

“The biological cell-scale imaging technology we have been working on for decades is directly translatable for large-scale, real-time 3D vision,” Izatt says. “These are exactly the capabilities needed for robots to see and interact with humans safely.” In a 3D world, he explains, “they need to be able to see us as well as we can see them.”